Become a better software tester by looking within, instead of outside the box

by Philip Lew, XBOSoft CEO


As an avid cyclist and triathlete, I’m keenly aware that putting in more miles doesn’t necessarily add up to better results. The same goes for software testing. Because of testing biases we are unaware of, we either work harder, or less effectively, than we should.

It’s not how much work you put in, it’s the thought that goes into it. If we really want to increase our testing capabilities, we should analyze our ways of thinking and identify their strengths and weaknesses.

We all have cognitive biases we’re not consciously aware of. Why do you find certain types of defects and not others? Why are your estimates always low, forcing you to work overtime? How do our biases take shape in the agile testing process, and is that different from what happens in more traditional processes?

Daniel Kahneman’s book, “Thinking, Fast and Slow,” identifies many cognitive biases that impact our ability to make good decisions. And when it comes to software testing, one of the most common questions you may be asked, and one that crucial decisions depend on, is:

“Is the software ready for release?”

Or in another form:

“Are we done testing?”

Sometimes we depend on our intuition, and sometimes we think things through. The only problem is knowing when to use your intuition — and when not to. When you make a decision about whether the software is ready for release, what biases are you aware of in your thinking patterns?

Three Cognitive Biases That Manifest as Testing Biases

Our hard-wired cognitive biases often show up in our work as testing biases. In my experience, the three cognitive biases that most directly affect our ability to excel (or not) as software testers are:

Reacting Versus Responding

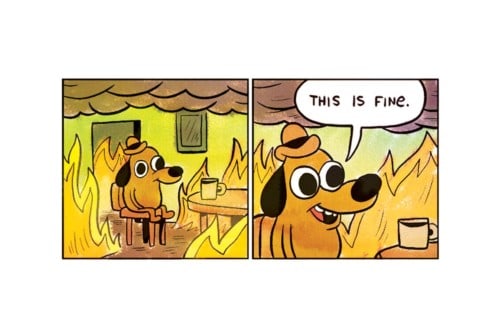

Many times we react to information or form a quick impression of something and use our intuition to make a snap decision. We may see a threat, react quickly, and be under the illusion that we are in control and making a sound decision based on our experience. However, we are often wrong and would be better off slowing down to think.

We often make a snap judgment about someone we meet because they look similar to another person we knew. Or a doctor may see you have a fever and immediately say, “You need to take some aspirin.” In reality, although the decision is efficient and quick, it may not be the best decision.

In software testing, sometimes we may examine our automation test results and make a quick assessment that the software is good to go just based on the percent passed. What other information should you be analyzing carefully before making this decision? Among the major testing biases, this one can be offset by examining whether you are responding to a situation or reacting — a test you can use to improve the rest of your life!
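One way to respond rather than react is to ask what the pass percentage leaves out. The sketch below is only an illustration, assuming test results are available as simple records; the `ResultRecord` fields, the `critical` flag, and the list of required areas are all hypothetical and not tied to any particular test tool.

```python
from dataclasses import dataclass


@dataclass
class ResultRecord:
    name: str
    area: str               # hypothetical: feature area the test covers
    status: str             # "passed", "failed", or "skipped"
    critical: bool = False  # hypothetical: marks release-blocking tests


def release_questions(results, required_areas):
    """Return the pass rate plus concerns that a bare pass rate would hide."""
    executed = [r for r in results if r.status != "skipped"]
    passed = [r for r in executed if r.status == "passed"]
    pass_rate = len(passed) / len(executed) if executed else 0.0

    concerns = []

    # Skipped tests do not lower the pass rate, so they are easy to miss.
    skipped = [r.name for r in results if r.status == "skipped"]
    if skipped:
        concerns.append(f"skipped tests: {skipped}")

    # One failure in a critical test can outweigh many passes elsewhere.
    critical_failures = [
        r.name for r in results if r.critical and r.status == "failed"
    ]
    if critical_failures:
        concerns.append(f"critical failures: {critical_failures}")

    # Areas with no executed tests say nothing about their quality.
    covered = {r.area for r in executed}
    untested = sorted(set(required_areas) - covered)
    if untested:
        concerns.append(f"areas with no executed tests: {untested}")

    return pass_rate, concerns


if __name__ == "__main__":
    sample = [
        ResultRecord("login_ok", "login", "passed"),
        ResultRecord("checkout_total", "checkout", "failed", critical=True),
        ResultRecord("search_basic", "search", "skipped"),
    ]
    rate, concerns = release_questions(
        sample, ["login", "checkout", "search", "reports"]
    )
    print(f"pass rate: {rate:.0%}")
    for concern in concerns:
        print("-", concern)
```

The point is not these particular checks but the habit: the percentage is one input, and the list of concerns is a prompt to slow down before answering "Are we done testing?"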

Effort Expended

Sometimes we work very hard at something, finally arrive at a result, and then become attached to it. In other words, because of the time and effort we put in, we may trust that result even when it contradicts other information, simply because we are invested in it.

In everyday life, this manifests itself in many ways. For instance, you’ve put lots of effort into a business and, despite all the signals that the business is failing, you don’t listen. Rather than shut it down, you keep fighting when all the signs indicate you are wasting your time and money. Most entrepreneurs fail, but somehow you think your situation is different.

In a software tester’s life, perhaps you worked hard on testing a certain function or user story, or put a lot of effort into the tests that you’re going to give to a developer for test-driven development. But with your effort focused on one part of the software, you may have overlooked other parts that could be more defect-laden.

Natural Optimism

Many people have a natural tendency to be optimistic. Our optimistic bias leads us to take on risks and challenges while overestimating our chances for success.

According to Forbes, 80 percent of businesses fail in the first 18 months. Yet surveys indicate that entrepreneurs give themselves a 60 percent chance of success. Social and economic pressures favor overconfidence. Who wants to hang out with someone who is “gloom and doom”? Yet this bias and societal pressure lead to an illusion of control.

While we make these optimistic decisions and estimates, this bias is precisely the reason why so many of our tasks take longer than we expected. Our natural optimism tends to also cloud what information we consider in making decisions. It leads us to consider the most recent positive supportive evidence that comes to mind, rather than consider negative or older information that is not so easy to remember.

The next time you’re making an estimate about how long a task will take, consider all past data points in doing similar tasks, not just what you think it will take based on the current information.
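As a minimal sketch of that advice, assuming you have kept a record of estimated versus actual hours for similar past tasks, you could scale a new gut-feel estimate by how far off the earlier ones were. The function name, the history format, and the choice of the median ratio are illustrative assumptions, not a prescribed method.

```python
from statistics import median


def adjusted_estimate(gut_estimate_hours, history):
    """Scale a gut-feel estimate by the median actual/estimated ratio.

    history is a list of (estimated_hours, actual_hours) pairs from
    similar past tasks (a hypothetical record kept by the tester).
    """
    ratios = [
        actual / estimated
        for estimated, actual in history
        if estimated > 0
    ]
    if not ratios:
        # No usable history: fall back to the original estimate.
        return gut_estimate_hours
    # Use every past data point, not only the most recent or memorable one.
    return gut_estimate_hours * median(ratios)


if __name__ == "__main__":
    past_tasks = [(4, 6), (10, 15), (8, 8)]
    print(adjusted_estimate(10, past_tasks))
```

Using the median rather than the latest data point keeps one unusually smooth (or unusually painful) task from dominating the adjustment.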

Getting back to how to become a better software tester, I think it’s good to look deeper inside ourselves and examine our natural cognitive biases. By examining these testing biases, we will be able to see where we are making decisions and estimates that have a high probability of being wrong. You can think of this as another method of defect prevention!


Upcoming Webinar

I will be exploring the fascinating topic of how cognitive biases become testing biases in more depth with Gerie Owen, a self-described Test Architect who has spent considerable time examining testers’ biases — as well as her own. The webinar will be July 11. Her topic: How Did I Miss That Bug? Managing Cognitive Bias in Testing.
